Process events coming from <geosys/> to fetch, format, and publish analytics to an Azure storage account.


EarthDaily Agro is the agricultural analysis division of EarthDaily Analytics. Learn more about EarthDaily at EarthDaily Analytics | Satellite imagery & data for agriculture, insurance, surveillance. EarthDaily Agro uses satellite imaging to provide advanced analytics that mitigate risk and increase efficiency, leading to more sustainable outcomes for the organizations and people who feed the planet.

Through our <geosys/> platform, we make geospatial analytics easy for you to browse or analyze, within our cloud or within your own environment. We give developers and data scientists both flexibility and extensibility, with analytics-ready data and digital-agriculture-ready development blocks. We empower your team to enrich your systems with information at the field, regional, or continent level via our API or Apps.

We have a team of experts around the world who understand local crops and the ag industry, as well as advanced analytics, to support your business.

We have established a developer community to provide you with plug-ins and integrations so you can discover, request, and use aggregate imagery products based on Landsat, Sentinel, MODIS, and many other open and commercial satellite sensors.

This project aims to provide an easy, ready-to-use event consumer and analytic formatter that lets customers quickly initialize and keep up to date field-level analytics derived from satellite imagery.

Use of this project requires valid credentials from the <geosys/> platform. If you need trial access, please register here. This project uses .NET 6.0.

To start, clone the current project and open it in your code editor.

Update the appsettings file:

    "Azure": {
      "BlobStorage": {
        "ConnectionString": "MyAzureBlobStorageConnectionString"  <- Put the connection string to your Azure storage account here
      }
    },
    "IdentityServer": {
      "Url": "IdentityServerUrl",  <- Open question: should this target preprod and/or prod?
      "TokenEndPoint": "connect/token",
      "UserLogin": "myuser",  <- Set the user login from your trial access here
      "UserPassword": "mypassword",  <- Set the password from your trial access here (key name missing in the source; "UserPassword" is assumed)
      "ClientId": "myclientid",  <- Set the clientId from your trial access here
      "ClientSecret": "myclientsecret",  <- Set the clientSecret from your trial access here (key name missing in the source; "ClientSecret" is assumed)
      "Scope": "openid offline_access",
      "GrantType": "password"
    },
    "MapProduct": {
      "Url": "MapProductUrl"
    }
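To illustrate how the `IdentityServer` settings fit together, here is a minimal Python sketch that builds a token request from them. It assumes the identity server accepts a standard OAuth2 password-grant request (form-encoded POST to `Url` + `TokenEndPoint`); the key names `UserPassword` and `ClientSecret`, the host `identity.example.invalid`, and the helper `build_token_request` are assumptions for illustration, not part of this project.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_token_request(identity: dict) -> Request:
    """Build (but do not send) an OAuth2 password-grant token request."""
    # Join the base URL and the token endpoint with exactly one slash.
    url = identity["Url"].rstrip("/") + "/" + identity["TokenEndPoint"].lstrip("/")
    form = {
        "grant_type": identity["GrantType"],
        "username": identity["UserLogin"],
        "password": identity["UserPassword"],       # assumed key name
        "client_id": identity["ClientId"],
        "client_secret": identity["ClientSecret"],  # assumed key name
        "scope": identity["Scope"],
    }
    return Request(
        url,
        data=urlencode(form).encode("utf-8"),
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# Placeholder values mirroring the appsettings section above.
EXAMPLE_IDENTITY = {
    "Url": "https://identity.example.invalid",  # hypothetical host
    "TokenEndPoint": "connect/token",
    "UserLogin": "myuser",
    "UserPassword": "mypassword",
    "ClientId": "myclientid",
    "ClientSecret": "myclientsecret",
    "Scope": "openid offline_access",
    "GrantType": "password",
}

request = build_token_request(EXAMPLE_IDENTITY)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it; if the server returns a standard OAuth2 token response, the bearer token would be in the `access_token` field of the JSON body.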

Finally, build this web project and host it in your infrastructure.

Contact the EarthDaily Agro customer desk to share your formatter URL. We will configure our notification pipeline to publish events to your URL.

Each event from this stream is tied to a field and to a new analytic becoming available (a clear image or another analytic generated for this field). To enable analytic event publication, each field of interest has to be subscribed. This can be done using the subscription API:

- For historical analytics, use:

      curl --request POST 'http://<root>/analytics-sink-subscriptions/v1/user-sink-entity-replays/query' \
      --header 'Content-Type: application/json' \
      --header 'Accept: */*' \
      --header 'Authorization: Bearer _Token_' \
      --data-raw '{
        "entity": {
          "id": "_SeasonField_id_",
          "type": "SeasonField"
        },
        "user": {
          "id": "_user_unique_id_"
        },
        "schema": {
          "id": "_SCHEMA_CODE_"
        }
      }'

With this subscription, an event will be generated for each existing analytic that corresponds to the schema and is tied to the field. This subscription will not trigger any analytic generation.

- For real-time analytics, use:

      curl --request POST 'http://<root>/analytics-sink-subscriptions/v1/user-sink-subscriptions' \
      --header 'Content-Type: application/json' \
      --header 'Accept: */*' \
      --header 'Authorization: Bearer _Token_' \
      --data-raw '{
        "entity": {
          "id": "_SeasonField_id_",
          "type": "SeasonField"
        },
        "user": {
          "id": "_user_unique_id_"
        },
        "schema": {
          "id": "_SCHEMA_CODE_"
        },
        "streaming": {
          "enabled": true
        }
      }'

With this subscription, an event will be generated each time a new analytic corresponding to the schema and tied to the field is generated. This subscription will not trigger any analytic generation; it only publishes a notification event based on an internal generation event.
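The two subscription calls differ only in their path and in the `streaming` block, so they can be sketched with one helper. The Python below is an illustrative sketch, not part of this project: the function name `build_subscription_request`, the host `api.example.invalid`, and the sample identifiers are hypothetical, while the paths, headers, and body fields are taken from the requests shown in this section.

```python
import json
from urllib.request import Request

BASE_PATH = "analytics-sink-subscriptions/v1"


def build_subscription_request(root, token, season_field_id, user_id,
                               schema_code, streaming=False) -> Request:
    """Build (but do not send) a subscription request.

    streaming=False targets the historical replay endpoint;
    streaming=True targets the real-time subscription endpoint.
    """
    body = {
        "entity": {"id": season_field_id, "type": "SeasonField"},
        "user": {"id": user_id},
        "schema": {"id": schema_code},
    }
    if streaming:
        body["streaming"] = {"enabled": True}
        path = "user-sink-subscriptions"
    else:
        path = "user-sink-entity-replays/query"
    return Request(
        f"http://{root}/{BASE_PATH}/{path}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "*/*",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


# Hypothetical values; replace them with your own root, token, and identifiers.
replay = build_subscription_request(
    "api.example.invalid", "my-token", "field-1", "user-1", "SCHEMA_CODE")
live = build_subscription_request(
    "api.example.invalid", "my-token", "field-1", "user-1", "SCHEMA_CODE",
    streaming=True)
print(replay.full_url)
print(live.full_url)
```

A typical flow would send the replay request once per field to backfill historical analytics, then the streaming request to keep receiving new ones.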

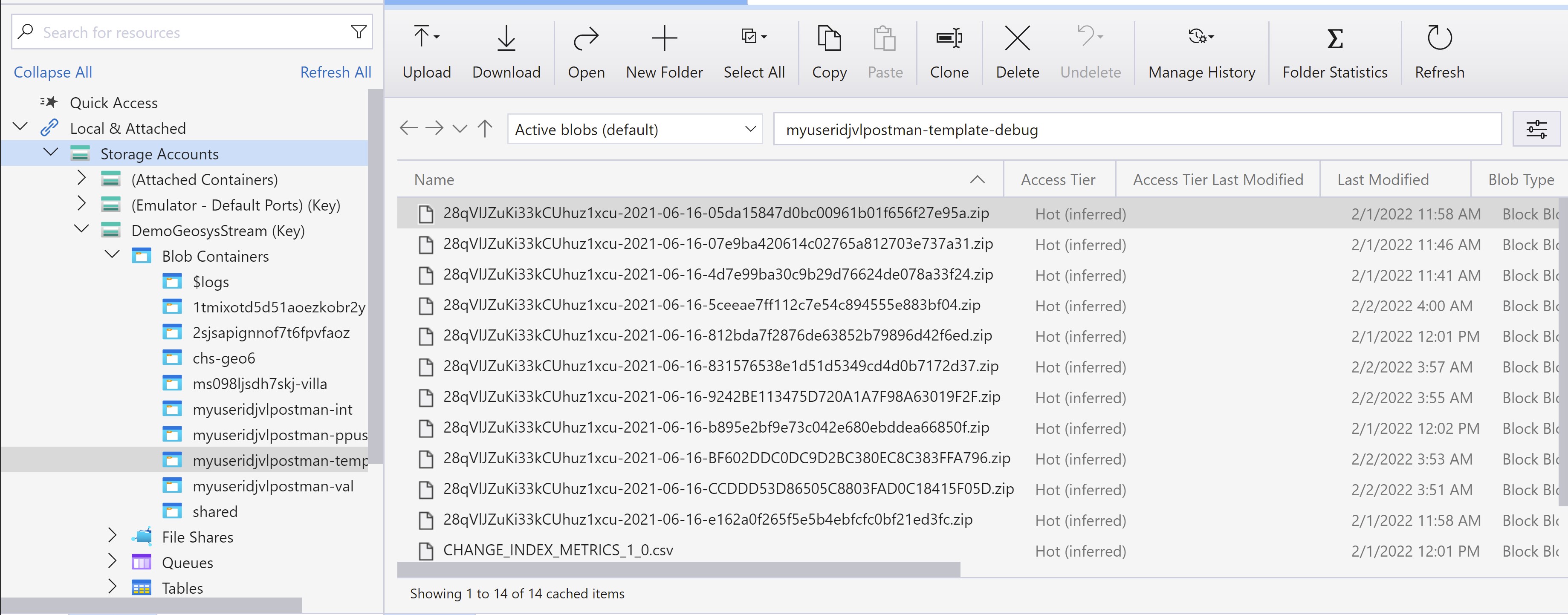

For each notification, the Analytic Stream Formatter fetches the analytic:

- an NDVI map as a zipped GeoTIFF file

- a change index JSON object

Each analytic is published to the configured storage account. Each NDVI map is stored as a separate asset, whereas change index values are consolidated into a single CSV file.
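To make the consolidation idea concrete, here is a small Python sketch that appends one change index value per notification to a single CSV. It is not the project's implementation: the column names, the helper `append_change_index`, and the sample values are hypothetical, since this document does not describe the actual CSV layout or the structure of the change index JSON object.

```python
import csv
import io

# Hypothetical column layout; the real CSV columns are not documented here.
CSV_COLUMNS = ["season_field_id", "date", "change_index"]


def append_change_index(csv_text: str, season_field_id: str,
                        date: str, change_index: float) -> str:
    """Return csv_text with one more row, adding the header if it is empty."""
    out = io.StringIO()
    out.write(csv_text)
    writer = csv.writer(out, lineterminator="\n")
    if not csv_text:
        writer.writerow(CSV_COLUMNS)
    writer.writerow([season_field_id, date, change_index])
    return out.getvalue()


# Two notifications for the same field end up in the same CSV.
consolidated = append_change_index("", "field-1", "2022-06-01", 0.12)
consolidated = append_change_index(consolidated, "field-1", "2022-06-06", -0.05)
print(consolidated)
```

In a real deployment the CSV text would be read from and written back to the storage account rather than kept in memory.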

The naming convention is <GeosysSeasonFieldID-yyyy-mm-dd-.zip

If you want to control how notifications are integrated into your platform, or how they are persisted, you can update IAnalyticsService or implement your own version of it.

To change the map type extracted (Geotiff NDVI), please update or implement

We also provide consulting services in case you need support to create your own analytic pipeline.

The Analytic Stream Formatter can be used to initialize a field-level dataset for an innovation project. This will allow you to fetch 10+ years of clear maps on fields of interest.

The Analytic Stream Formatter is a good fit for creating your own field-level map pipeline, letting you receive fresh and directly usable field-level analytics as they become available.

The following links will provide access to more information:

If this project has been useful and has helped you or your business save precious time, don't hesitate to give it a star.

Distributed under the GPL 3.0 License.

For any additional information, please email us.

© 2022 Geosys Holdings ULC, an Antarctica Capital portfolio company | All Rights Reserved.